WildCap: Autonomous Non-Invasive Monitoring of Animal Behavior and Motion

Project Goal: Continuous, accurate and on-board inference of behavior, pose and shape of animals from multiple, unsynchronized and close-range aerial images acquired in the animal's natural habitat, without any sensors or markers on the animals.

Videos of Latest Results

Our autonomous tracking and following methods enable a multi-rotor drone to track various species at the Mpala conservancy in April 2025.

Our cooperative tracking and following methods enable two multi-rotor drones to track various species at the Mpala conservancy in April 2025.

Objective 1: Development of novel aerial platforms for tracking and following animals

Autonomous Systems for Non-Invasive Monitoring of Animal Behavior and Motion [8, 10]

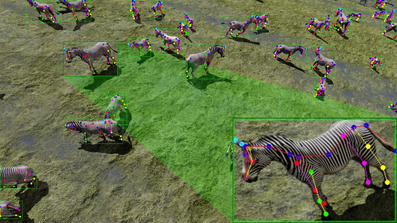

Objective 2: Novel methods for animal behavior, pose and shape estimation

A Framework for Fast, Large-scale, Semi-Automatic Inference of Animal Behavior from Monocular Videos [1, 4]

Objective 3: Autonomous control methods for multiple aerial robots to maximize the accuracy of animal behavior, pose and shape inference

Perception-driven Formation Control of Airships [2]

Reinforcement Learning-based Airship Control [5, 6]

Reinforcement Learning-based Autonomous Landing on Moving Platforms [9]

Videos of WildCap's Results

Project Description

Overview: Automatically inferring animal behavior at scale, such as detecting whether animals are standing, grazing, or interacting with their environment, is crucial for addressing key ecological challenges. Achieving this across large spatial and temporal scales remains a fundamental goal in wildlife research. Additionally, real-time estimation of an animal’s 3D pose and shape can aid in disease diagnosis, health profiling, and high-resolution behavior inference. However, conducting behavior analysis and pose estimation in the wild, without relying on physical markers or body-mounted sensors, presents a significant challenge. Current state-of-the-art methods often require GPS collars, IMU tags, or stationary camera traps, which are difficult to scale across vast landscapes and can pose risks to animals. In WildCap (2021-2026), a project funded by Cyber Valley in Germany, we have developed autonomous systems to estimate the behavior, pose, and shape of endangered wild animals without physical interference. We introduce vision-based aerial robots that detect, track, and follow animals using novel control methods while performing behavior, pose, and shape estimation. To enhance vision-based inference, we developed synthetic data generation pipelines, and we validated these methods in extensive field missions and experiments.

Goal: Continuous, accurate and on-board inference of behavior, pose and shape of animals from multiple, unsynchronized and close-range aerial images acquired in the animal's natural habitat, without any sensors or markers on the animals.

Objectives:

- Development of novel aerial platforms for tracking and following animals

- Novel methods for animal behavior, pose and shape estimation

- Autonomous control methods for multiple aerial robots to maximize the accuracy of animal behavior, pose and shape inference

Project Publications

1. Price, E., Khandelwal, P. C., Rubenstein, D. I., & Ahmad, A. (2025). A Framework for Fast, Large-scale, Semi-Automatic Inference of Animal Behavior from Monocular Videos. Methods in Ecology and Evolution (Wiley) (accepted, DOI awaited). bioRxiv version (2023): https://doi.org/10.1101/2023.07.31.551177

2. [Award Paper] Price, E., Black, M., & Ahmad, A. (2023). Viewpoint-driven Formation Control of Airships for Cooperative Target Tracking. IEEE Robotics and Automation Letters, vol. 8, no. 6, pp. 3653-3660, June 2023. https://doi.org/10.1109/LRA.2023.3264727 (Best Faculty Paper Award 2023: best paper in 2023 from the Faculty of Aerospace Engineering and Geodesy at the University of Stuttgart)

3. Bonetto, E., & Ahmad, A. (2023). Synthetic Data-based Detection of Zebras in Drone Imagery. IEEE European Conference on Mobile Robots (ECMR 2023), pp. 1-8. https://doi.org/10.1109/ECMR59166.2023.10256293

4. Price, E., & Ahmad, A. (2023). Accelerated Video Annotation driven by Deep Detector and Tracker. 18th International Conference on Intelligent Autonomous Systems (IAS 18). https://link.springer.com/chapter/10.1007/978-3-031-44981-9_12

5. Zuo, Y., Liu, Y. T., & Ahmad, A. (2023). Autonomous Blimp Control via H-infinity Robust Deep Residual Reinforcement Learning. 2023 IEEE 19th International Conference on Automation Science and Engineering (CASE 2023), Auckland, New Zealand, pp. 1-8. https://doi.org/10.1109/CASE56687.2023.10260561

6. Liu, Y. T., Price, E., Black, M. J., & Ahmad, A. (2022). Deep Residual Reinforcement Learning based Autonomous Blimp Control. 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, pp. 12566-12573. https://doi.org/10.1109/IROS47612.2022.9981182

7. Saini, N., Bonetto, E., Price, E., Ahmad, A., & Black, M. J. (2022). AirPose: Multi-View Fusion Network for Aerial 3D Human Pose and Shape Estimation. IEEE Robotics and Automation Letters, 7(2), 4805–4812. https://doi.org/10.1109/LRA.2022.3145494

8. Price, E., Liu, Y. T., Black, M. J., & Ahmad, A. (2022). Simulation and Control of Deformable Autonomous Airships in Turbulent Wind. In: Ang Jr, M.H., Asama, H., Lin, W., Foong, S. (eds) Intelligent Autonomous Systems 16. IAS 2021. Lecture Notes in Networks and Systems, vol 412. Springer, Cham. https://doi.org/10.1007/978-3-030-95892-3_46

9. Goldschmid, P., & Ahmad, A. (2024). Reinforcement learning based autonomous multi-rotor landing on moving platforms. Autonomous Robots, Springer, vol. 48, no. 13, June 2024. https://doi.org/10.1007/s10514-024-10162-8

10. [Award Paper] Price, E., & Ahmad, A. (2024). Airship Formations for Animal Motion Capture and Behavior Analysis. In 2nd International Conference on Design and Engineering of Lighter-Than-Air systems (DELTAs 2024), Mumbai, India. Preprint: https://arxiv.org/abs/2404.08986 (Awarded the 'Best Paper Award' for papers presented on Day 2 of the conference)

11. Bonetto, E., & Ahmad, A. (2025). GRADE: Generating Realistic Animated Dynamic Environments for Robotics Research with Isaac Sim. The International Journal of Robotics Research (IJRR), 2025;0(0), April 2025. https://doi.org/10.1177/02783649251346211